Integrating Scrapy with Pyppeteer

Published: 2025-04-22 10:42:01  Editor: 123

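Pyppeteer is a Python port of Puppeteer that drives a headless Chrome/Chromium browser to render pages automatically. Integrating it into a Scrapy project lets you handle pages whose content is produced by JavaScript, which is very useful when crawling sites that load their data dynamically.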

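The integration works like this. Define a custom Downloader Middleware and implement its process_request method. Inside process_request, take the URL from the Request object and load it with Pyppeteer. Then grab the HTML after JavaScript has rendered it, wrap that HTML in an HtmlResponse, and return it. A downloader middleware whose process_request returns a Response skips Scrapy's normal download, so this HtmlResponse goes straight to the Spider. The Spider therefore receives the JavaScript-rendered page.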
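The control flow this relies on fits in a few lines. The following is a toy model that uses only the standard library and is not Scrapy's code. ToyRequest, ToyResponse, download, fake_render and plain_fetch are all hypothetical names. It captures one documented rule: when a downloader middleware's process_request returns a Response, Scrapy skips the actual download. It does not model priority-based ordering, process_response, or Deferreds.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ToyRequest:
    url: str


@dataclass
class ToyResponse:
    url: str
    body: str
    source: str  # which component produced the response


# A toy middleware: return a response to answer the request, or None to pass it on
ToyMiddleware = Callable[[ToyRequest], Optional[ToyResponse]]


def download(request: ToyRequest,
             middlewares: List[ToyMiddleware],
             fetch: Callable[[ToyRequest], ToyResponse]) -> ToyResponse:
    """Try each middleware in order; the first non-None result short-circuits
    the download, otherwise fall back to the normal fetch."""
    for middleware in middlewares:
        result = middleware(request)
        if result is not None:
            return result
    return fetch(request)


def fake_render(request: ToyRequest) -> Optional[ToyResponse]:
    # Stands in for PyppeteerMiddleware: pretend a browser rendered the page
    return ToyResponse(request.url, "<div class='item'>...</div>", "browser")


def plain_fetch(request: ToyRequest) -> ToyResponse:
    # Stands in for the normal downloader, e.g. returning only an empty app shell
    return ToyResponse(request.url, "<div id='app'></div>", "downloader")
```

With [fake_render] in the chain, download() returns the "browser" response and never calls plain_fetch. With an empty chain it falls back to the "downloader" response.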

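Pyppeteer relies on asyncio for asynchronous crawling, so its methods are coroutines defined with async and must be awaited. Scrapy is also asynchronous, but its concurrency is built on Twisted. How can the two work together? Starting with version 2.0, Scrapy supports asyncio. Twisted's asynchronous object is called a Deferred, while asyncio's is called a Future. The compatibility works by converting a Future into a Deferred, as in the following code: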

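```python
import asyncio

from twisted.internet.defer import Deferred


def as_deferred(f):
    # Wrap the coroutine in an asyncio Task (a Future), then adapt it to a Twisted Deferred
    return Deferred.fromFuture(asyncio.ensure_future(f))
```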
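The conversion is easier to follow with a toy model of the idea behind Deferred.fromFuture: register a done-callback on the asyncio Future and use it to fire a callback-style object. ToyDeferred and toy_from_future are hypothetical stand-ins. Twisted's real Deferred also supports errbacks, callback chaining and cancellation, and none of those are modelled here.

```python
import asyncio
from typing import Any, Callable, List


class ToyDeferred:
    """Hypothetical, minimal stand-in for twisted.internet.defer.Deferred:
    stores callbacks and fires them once with a result (no errbacks,
    chaining or cancellation)."""

    def __init__(self) -> None:
        self._callbacks: List[Callable[[Any], Any]] = []
        self._fired = False
        self._result: Any = None

    def add_callback(self, fn: Callable[[Any], Any]) -> "ToyDeferred":
        if self._fired:
            fn(self._result)  # already fired: call immediately
        else:
            self._callbacks.append(fn)
        return self

    def callback(self, result: Any) -> None:
        self._fired = True
        self._result = result
        for fn in self._callbacks:
            fn(result)


def toy_from_future(future: "asyncio.Future") -> ToyDeferred:
    """Fire the toy Deferred when the asyncio Future completes."""
    d = ToyDeferred()
    future.add_done_callback(lambda f: d.callback(f.result()))
    return d


async def render(url: str) -> str:
    await asyncio.sleep(0.01)  # stands in for page.goto() / page.content()
    return f"<html>{url}</html>"


async def main() -> List[str]:
    received: List[str] = []
    # Same shape as as_deferred(): ensure_future() first, then adapt the Future
    task = asyncio.ensure_future(render("https://spa5.scrape.center"))
    toy_from_future(task).add_callback(received.append)
    await task
    await asyncio.sleep(0)  # let the scheduled done-callback run
    return received
```

Running asyncio.run(main()) returns a list holding the rendered string. That result reached the caller only through the callback attached to the toy Deferred.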

Installing Scrapy

```bash
pip install scrapy -i https://mirrors.aliyun.com/pypi/simple
```

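Twisted's Deferred class provides a fromFuture method that takes a Future and returns a Deferred. You also need to switch Twisted's reactor to the asyncio-based one by adding the following line to the project's settings.py: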

```python
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
```

Implementing the Pyppeteer integration

Install pyppeteer. Note: pin version 1.0.2. With version 2.0.0, the download of chrome-win32.zip fails because the file cannot be found.

```bash
pip install pyppeteer==1.0.2 -i https://mirrors.aliyun.com/pypi/simple
```
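The two pyppeteer releases behave differently here, so it can help to check which version is actually installed before you debug a failed Chromium download. Below is a minimal sketch using the standard library's importlib.metadata (Python 3.8+). installed_version and check_pyppeteer are hypothetical helper names.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


def check_pyppeteer(expected: str = "1.0.2") -> str:
    """Describe whether the installed pyppeteer matches the pinned version."""
    found = installed_version("pyppeteer")
    if found is None:
        return "pyppeteer is not installed"
    if found != expected:
        return f"pyppeteer {found} is installed, expected {expected}"
    return f"pyppeteer {found} OK"
```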

Create a new project named scrapy_pyppeteer_demo:

```bash
scrapy startproject scrapy_pyppeteer_demo
```

Enter the project directory and create a spider named book:

```bash
scrapy genspider book scrape.center
```

First, define the Item class, ScrapyPyppeteerDemoItem, as follows:

```python
import scrapy


class ScrapyPyppeteerDemoItem(scrapy.Item):
    # Fields extracted from each book's detail page
    name = scrapy.Field()
    tags = scrapy.Field()
    score = scrapy.Field()
    cover = scrapy.Field()
    price = scrapy.Field()
```

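Next, define the main crawling logic: the initial request, parsing the list pages, and parsing the detail pages. The initial request is defined in start_requests: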

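```python
import logging

from scrapy import Request, Spider

logger = logging.getLogger(__name__)


class BookSpider(Spider):
    name = 'book'
    allowed_domains = ['spa5.scrape.center']
    base_url = 'https://spa5.scrape.center'

    def start_requests(self):
        """
        first page
        :return:
        """
        start_url = f'{self.base_url}/page/1'
        logger.info('crawling %s', start_url)
        yield Request(start_url, callback=self.parse_index)
```

start_requests builds the request for the first page and yields it, with parse_index as the callback. As its name suggests, parse_index parses the list page. It extracts the URL of each detail page and also works out the URL of the next page.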

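```python
    # method of BookSpider; requires `import re` at the top of book.py
    def parse_index(self, response):
        """
        extract books and get next page
        :param response:
        :return:
        """
        items = response.css('.item')
        for item in items:
            href = item.css('.top a::attr(href)').extract_first()
            detail_url = response.urljoin(href)
            yield Request(detail_url, callback=self.parse_detail, priority=2)

        # stop once a rendered list page contains no books,
        # otherwise /page/N+1 would be requested forever
        if not items:
            return

        # next page
        match = re.search(r'page/(\d+)', response.url)
        if not match:
            return
        page = int(match.group(1)) + 1
        next_url = f'{self.base_url}/page/{page}'
        yield Request(next_url, callback=self.parse_index)
```

parse_index implements two pieces of logic. The first extracts the detail-page URL of each book and yields a new Request with parse_detail as its callback. That request has priority 2, so Scrapy schedules detail pages ahead of list pages, which use the default priority of 0. The second reads the current page number and adds 1 to build the next list-page URL. It then yields a new Request with parse_index as its callback.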
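You can check the URL arithmetic in parse_index on its own, without Scrapy. The sketch below mirrors it using only the standard library. next_page_url, detail_url and the sample href are hypothetical names chosen for illustration. urllib.parse.urljoin approximates response.urljoin, which also honours a <base> tag when the page has one.

```python
import re
from typing import Optional
from urllib.parse import urljoin

BASE_URL = "https://spa5.scrape.center"


def next_page_url(current_url: str, base_url: str = BASE_URL) -> Optional[str]:
    """Read the page number from /page/<n> and return the URL of page n + 1,
    or None when the URL has no page segment (parse_index returns early then)."""
    match = re.search(r"page/(\d+)", current_url)
    if not match:
        return None
    return f"{base_url}/page/{int(match.group(1)) + 1}"


def detail_url(page_url: str, href: str) -> str:
    """Resolve a relative detail link against the list-page URL."""
    return urljoin(page_url, href)
```

For example, next_page_url("https://spa5.scrape.center/page/3") gives "https://spa5.scrape.center/page/4".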

The last piece is parse_detail, which parses a detail page and extracts the final result. It pulls out the book's name, tags, score, cover and price, then builds an Item and yields it:

```python
    # method of BookSpider
    def parse_detail(self, response):
        """
        process detail info of book
        :param response:
        :return:
        """
        name = response.css('.name::text').extract_first()
        tags = response.css('.tags button span::text').extract()
        score = response.css('.score::text').extract_first()
        price = response.css('.price span::text').extract_first()
        cover = response.css('.cover::attr(src)').extract_first()
        # strip surrounding whitespace from the tags and the score
        tags = [tag.strip() for tag in tags] if tags else []
        score = score.strip() if score else None
        item = ScrapyPyppeteerDemoItem(name=name, tags=tags, score=score, price=price, cover=cover)
        yield item
```

Next, integrate Pyppeteer through a Downloader Middleware. Create a PyppeteerMiddleware in middlewares.py:

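```python
import asyncio
import logging

from pyppeteer import launch
from scrapy.http import HtmlResponse
from twisted.internet.defer import Deferred

# Quieten the verbose debug logs of pyppeteer and its websocket transport
logging.getLogger('websocket').setLevel('INFO')
logging.getLogger('pyppeteer').setLevel('INFO')


def as_deferred(f):
    # Turn a coroutine into a Twisted Deferred that Scrapy can wait on
    return Deferred.fromFuture(asyncio.ensure_future(f))


class PyppeteerMiddleware(object):
    async def _process_request(self, request, spider):
        # Launch a headless Chromium for this request (one browser per request)
        browser = await launch(headless=True)
        try:
            page = await browser.newPage()
            pyppeteer_response = await page.goto(request.url)
            # Give the page's JavaScript time to render the content
            await asyncio.sleep(3)
            html = await page.content()
            # page.content() returns decoded text, so drop any Content-Encoding header
            pyppeteer_response.headers.pop('content-encoding', None)
            pyppeteer_response.headers.pop('Content-Encoding', None)
            response = HtmlResponse(page.url, status=pyppeteer_response.status,
                                    headers=pyppeteer_response.headers,
                                    body=html.encode('utf-8'),
                                    encoding='utf-8',
                                    request=request)
            await page.close()
        finally:
            # Close the browser even if navigation or rendering fails
            await browser.close()
        return response

    def process_request(self, request, spider):
        # A Deferred that fires with an HtmlResponse replaces Scrapy's own download
        return as_deferred(self._process_request(request, spider))
```

Then modify settings.py as follows, keeping the TWISTED_REACTOR line added earlier: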

```python
ROBOTSTXT_OBEY = False

CONCURRENT_REQUESTS = 3

DOWNLOADER_MIDDLEWARES = {
    "scrapy_pyppeteer_demo.middlewares.PyppeteerMiddleware": 543,
}
```
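CONCURRENT_REQUESTS = 3 matters more than usual here. The middleware launches a separate Chromium instance for each request, so keeping concurrency low keeps the number of simultaneous browsers low. A plain asyncio.Semaphore can illustrate the effect of such a cap. This is only an analogy: Scrapy enforces CONCURRENT_REQUESTS in its own engine and downloader, not with this code. run_with_cap is a hypothetical name.

```python
import asyncio


async def run_with_cap(n_requests: int, cap: int) -> int:
    """Simulate n_requests page renders with at most `cap` running at once
    and return the highest number of renders observed in flight."""
    sem = asyncio.Semaphore(cap)
    active = 0
    peak = 0

    async def render(i: int) -> None:
        nonlocal active, peak
        async with sem:
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)  # stands in for launch/goto/sleep(3)
            active -= 1

    await asyncio.gather(*(render(i) for i in range(n_requests)))
    return peak
```

With ten simulated requests and a cap of three, the peak stays at three even though all ten are queued at once.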

Running the spider

```bash
scrapy crawl book
```

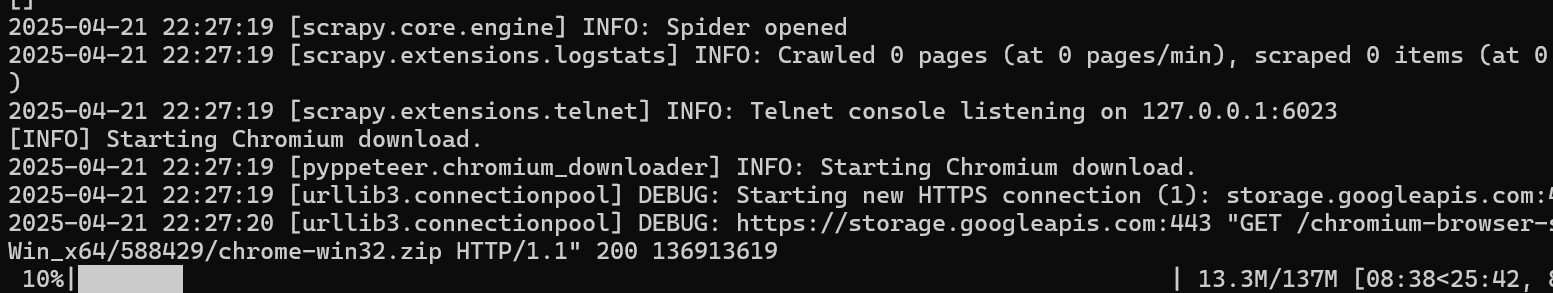

On the first run, pyppeteer downloads its Chromium build (the chrome-win32.zip file on Windows).

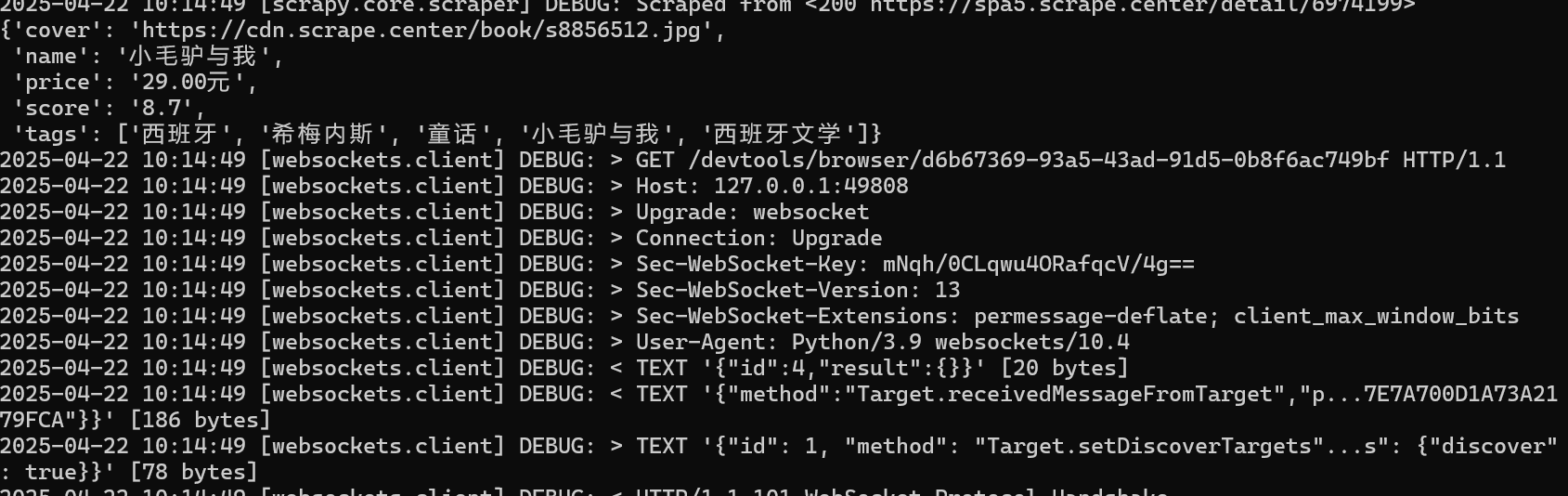

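While the spider runs, the Chromium browser driven by Pyppeteer loads each requested page. With headless=True, as in the middleware above, no browser window appears; launch with headless=False if you want to watch the pages load. The console output looks like this: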

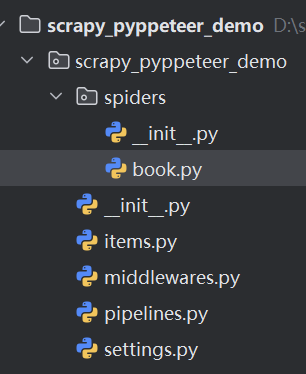

Project layout:

Complete code of book.py

```python
import logging
import re

from scrapy import Request, Spider

from scrapy_pyppeteer_demo.items import ScrapyPyppeteerDemoItem

logger = logging.getLogger(__name__)


class BookSpider(Spider):
    name = 'book'
    allowed_domains = ['spa5.scrape.center']
    base_url = 'https://spa5.scrape.center'

    def start_requests(self):
        """
        first page
        :return:
        """
        start_url = f'{self.base_url}/page/1'
        logger.info('crawling %s', start_url)
        yield Request(start_url, callback=self.parse_index)

    def parse_index(self, response):
        """
        extract books and get next page
        :param response:
        :return:
        """
        items = response.css('.item')
        for item in items:
            href = item.css('.top a::attr(href)').extract_first()
            detail_url = response.urljoin(href)
            yield Request(detail_url, callback=self.parse_detail, priority=2)

        # stop once a rendered list page contains no books,
        # otherwise /page/N+1 would be requested forever
        if not items:
            return

        # next page
        match = re.search(r'page/(\d+)', response.url)
        if not match:
            return
        page = int(match.group(1)) + 1
        next_url = f'{self.base_url}/page/{page}'
        yield Request(next_url, callback=self.parse_index)

    def parse_detail(self, response):
        """
        process detail info of book
        :param response:
        :return:
        """
        name = response.css('.name::text').extract_first()
        tags = response.css('.tags button span::text').extract()
        score = response.css('.score::text').extract_first()
        price = response.css('.price span::text').extract_first()
        cover = response.css('.cover::attr(src)').extract_first()
        # strip surrounding whitespace from the tags and the score
        tags = [tag.strip() for tag in tags] if tags else []
        score = score.strip() if score else None
        item = ScrapyPyppeteerDemoItem(name=name, tags=tags, score=score, price=price, cover=cover)
        yield item
```

Complete code of items.py

```python
import scrapy


class ScrapyPyppeteerDemoItem(scrapy.Item):
    # Fields extracted from each book's detail page
    name = scrapy.Field()
    tags = scrapy.Field()
    score = scrapy.Field()
    cover = scrapy.Field()
    price = scrapy.Field()
```

Complete code of middlewares.py

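```python
import asyncio
import logging

from pyppeteer import launch
from scrapy.http import HtmlResponse
from twisted.internet.defer import Deferred

# Quieten the verbose debug logs of pyppeteer and its websocket transport
logging.getLogger('websocket').setLevel('INFO')
logging.getLogger('pyppeteer').setLevel('INFO')


def as_deferred(f):
    # Turn a coroutine into a Twisted Deferred that Scrapy can wait on
    return Deferred.fromFuture(asyncio.ensure_future(f))


class PyppeteerMiddleware(object):
    async def _process_request(self, request, spider):
        # Launch a headless Chromium for this request (one browser per request)
        browser = await launch(headless=True)
        try:
            page = await browser.newPage()
            pyppeteer_response = await page.goto(request.url)
            # Give the page's JavaScript time to render the content
            await asyncio.sleep(3)
            html = await page.content()
            # page.content() returns decoded text, so drop any Content-Encoding header
            pyppeteer_response.headers.pop('content-encoding', None)
            pyppeteer_response.headers.pop('Content-Encoding', None)
            response = HtmlResponse(page.url, status=pyppeteer_response.status,
                                    headers=pyppeteer_response.headers,
                                    body=html.encode('utf-8'),
                                    encoding='utf-8',
                                    request=request)
            await page.close()
        finally:
            # Close the browser even if navigation or rendering fails
            await browser.close()
        return response

    def process_request(self, request, spider):
        # A Deferred that fires with an HtmlResponse replaces Scrapy's own download
        return as_deferred(self._process_request(request, spider))
```

With that, Scrapy can crawl JavaScript-rendered pages with the help of Pyppeteer.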
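One detail of the middleware deserves a closer look: it pops both spellings of content-encoding before it builds the HtmlResponse. page.content() returns the rendered DOM as already-decoded text. According to Scrapy's documentation, however, the process_response methods of installed downloader middlewares still run on a response returned from process_request. That includes the built-in HttpCompressionMiddleware, which decompresses bodies based on Content-Encoding. The sketch below does the same clean-up case-insensitively on a plain dict. prepare_rendered is a hypothetical helper, not part of Scrapy or Pyppeteer.

```python
from typing import Dict, Tuple


def prepare_rendered(headers: Dict[str, str], html: str) -> Tuple[Dict[str, str], bytes]:
    """Drop Content-Encoding in any capitalisation and encode the rendered DOM.

    The body is re-encoded from decoded text, so a leftover
    'Content-Encoding: gzip' header would no longer describe it.
    Returns a new header dict and a UTF-8 body.
    """
    cleaned = {k: v for k, v in headers.items() if k.lower() != "content-encoding"}
    return cleaned, html.encode("utf-8")
```

Filtering on k.lower() covers every capitalisation in a single pass. The two pop() calls in the middleware cover only the two most common spellings.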


- Name: Run

- Occupation: a mystery

- Email: 383697894@qq.com

- Location: Shanghai · Songjiang