Building a Local RAG Application with LangChain

Published: 2025-08-12 12:35:35 | Editor: Run

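Introduction to RAG

LLMs can produce misleading "hallucinations", may rely on outdated information, handle domain-specific knowledge inefficiently, lack deep insight into specialized fields, and are limited in their reasoning ability.

It is against this background that Retrieval-Augmented Generation (RAG) emerged and became one of the major trends of the AI era.

Before the language model generates an answer, RAG first retrieves relevant information from a large document collection and then uses that information to guide generation, which greatly improves the accuracy and relevance of the output. RAG effectively mitigates hallucination, lets the model's knowledge be updated faster, and makes generated content more traceable, so large language models become more practical and trustworthy in real applications.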

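What are the basic components of RAG?

- An embedding module that converts document chunks into vectors.
- A document loading and splitting module that loads documents and splits them into chunks.
- A database that stores the document chunks and their vector representations.
- A retrieval module that finds the chunks relevant to a query (the user's question).
- An LLM module that answers the user's question based on the retrieved documents.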

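What does the RAG workflow look like?

Indexing: split the document collection into shorter chunks and build a vector index with an encoder (embedding model).

Retrieval: find the chunks relevant to the question based on the similarity between the question and each chunk.

Generation: generate the answer to the question, conditioned on the retrieved context.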
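The three stages can be illustrated with a dependency-free toy sketch. It is not how LangChain implements them: as assumptions, a bag-of-words count vector stands in for a real embedding model, cosine similarity over a Python list stands in for the vector database, and "generation" only assembles the prompt that would be sent to an LLM. The helper names (`embed`, `build_index`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (stand-in for a real embedding model)
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(document: str) -> list[tuple[str, Counter]]:
    # Indexing: split into sentence-sized chunks and store each chunk with its vector
    chunks = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index: list[tuple[str, Counter]], question: str, k: int = 2) -> list[str]:
    # Retrieval: rank chunks by similarity to the question and keep the top k
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    # Generation: condition the LLM on the retrieved context (here we only build the prompt)
    joined = "\n\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


doc = (
    "Task decomposition splits a hard task into smaller steps. "
    "Chain of thought prompts the model to think step by step. "
    "Vector stores hold embeddings for similarity search."
)
index = build_index(doc)
top = retrieve(index, "How does task decomposition work?", k=1)
print(top)  # ['Task decomposition splits a hard task into smaller steps.']
print(build_prompt("How does task decomposition work?", top))
```

In the real application each of these toy pieces is replaced by a LangChain component: the text splitter, the Ollama embedding model, the Chroma vector store, and the chat model.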

Environment Setup

Install the packages needed for local embeddings, vector storage, and model inference:

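```shell
# LangChain and the community integrations (WebBaseLoader lives here)
pip install -qU langchain langchain_community
# Text splitters (RecursiveCharacterTextSplitter)
pip install -qU langchain-text-splitters
# WebBaseLoader parses HTML with BeautifulSoup
pip install -qU beautifulsoup4
# Chroma vector store integration
pip install -qU langchain_chroma
# Ollama integration (the Ollama server itself is installed separately)
pip install -qU langchain_ollama
```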

Document Loading

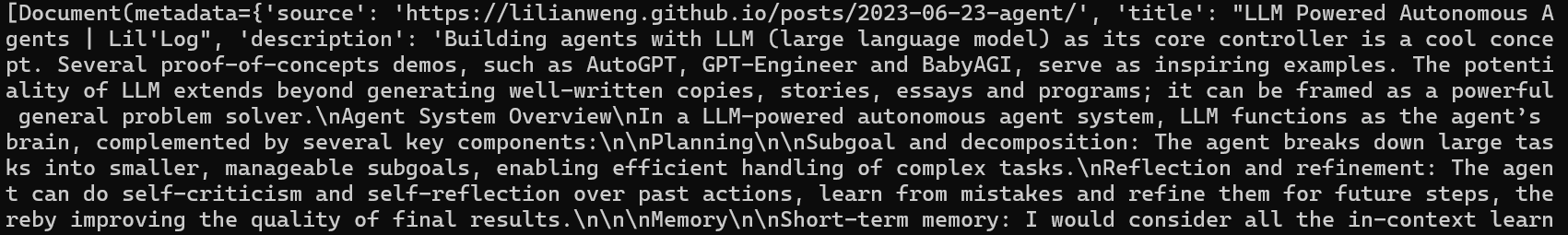

Load and split a sample document. We will use Lilian Weng's blog post about agents as the example.

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader

# Fetch the blog post and parse it into Document objects
loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

# Split into chunks of at most 500 characters, with no overlap between chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

print(all_splits)
```
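`chunk_size` and `chunk_overlap` are the two knobs set above. The sketch below is a deliberately simplified fixed-size character splitter (the function name `split_fixed` is hypothetical). `RecursiveCharacterTextSplitter` additionally tries to break on separators such as blank lines, newlines, and spaces before falling back to single characters, so its chunk boundaries will differ; the sketch only shows how size and overlap interact, with each new chunk starting `chunk_size - chunk_overlap` characters after the previous one.

```python
def split_fixed(text: str, chunk_size: int, chunk_overlap: int = 0) -> list[str]:
    # Each chunk holds at most chunk_size characters; consecutive chunks share
    # chunk_overlap characters, so the window advances by the difference
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("require 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


text = "abcdefghij"
print(split_fixed(text, chunk_size=4))                   # ['abcd', 'efgh', 'ij']
print(split_fixed(text, chunk_size=4, chunk_overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

With `chunk_overlap=0`, as in the tutorial, a sentence that straddles a boundary is cut in two; a small overlap keeps some shared context at the cost of storing more chunks.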

Next, initialize the vector store. The text embedding model used here is nomic-embed-text.

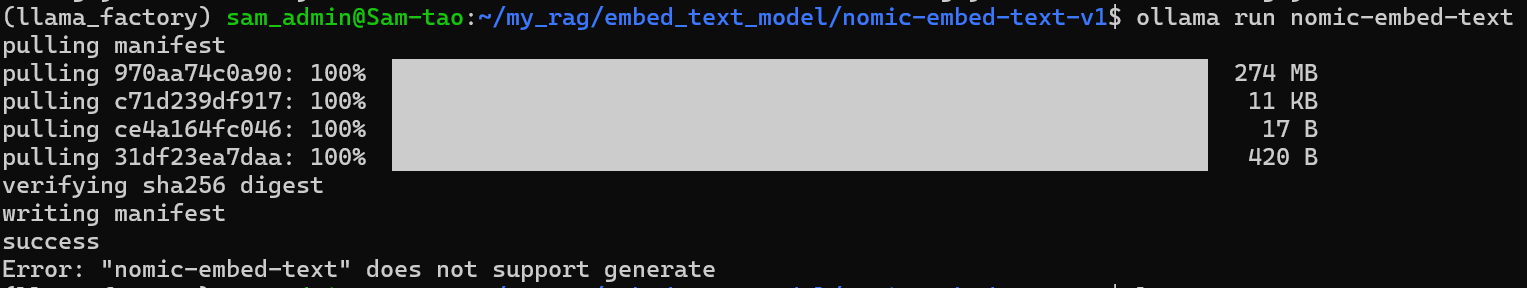

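Download the nomic-embed-text model with Ollama (`ollama pull nomic-embed-text`).

If you try to generate text with `ollama run nomic-embed-text`, you get the error: "Error: "nomic-embed-text" does not support generate".

Cause: the command asks the model to generate text, which nomic-embed-text does not support. The model is designed only to produce text embeddings and has no text-generation capability.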

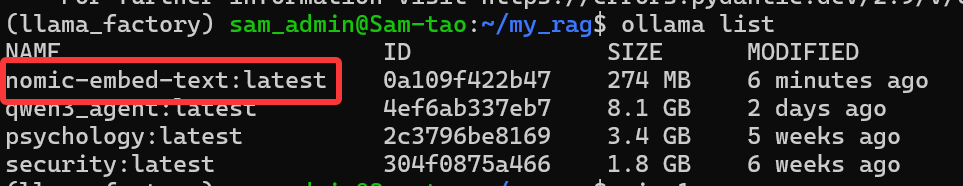

Checking the Ollama model list (`ollama list`)

Now let's build a local vector database and run a quick similarity-search test:

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_chroma import Chroma
from langchain_ollama import OllamaEmbeddings

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

# Embed every chunk with the local Ollama embedding model
local_embeddings = OllamaEmbeddings(model="nomic-embed-text:latest")

# Store chunks and vectors in an in-memory Chroma collection
vectorstore = Chroma.from_documents(documents=all_splits, embedding=local_embeddings)
```

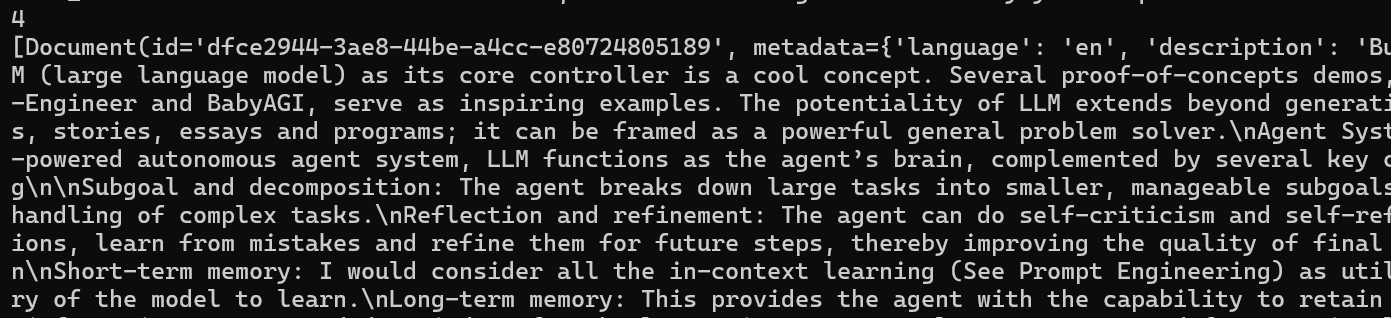

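```python
question = "What are the approaches to task decomposition?"

# Embed the question and return the most similar chunks (k=4 by default)
docs = vectorstore.similarity_search(question)

print(len(docs))
print(docs)
```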
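Conceptually, `similarity_search` embeds the question with the same embedding model and returns the `k` stored chunks whose vectors are closest to it (`k` defaults to 4 in LangChain's Chroma wrapper). The sketch below does this by brute force with squared Euclidean (L2) distance, which is Chroma's default collection metric; Chroma itself uses an approximate HNSW index rather than a linear scan. The 3-dimensional vectors and the name `knn_search` are made up for illustration.

```python
def squared_l2(a: list[float], b: list[float]) -> float:
    # Squared Euclidean distance: smaller means more similar
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_search(query_vec: list[float], store: list[tuple[str, list[float]]], k: int = 4) -> list[str]:
    # Linear scan over (text, vector) pairs; return the k texts nearest to the query
    ranked = sorted(store, key=lambda item: squared_l2(query_vec, item[1]))
    return [text for text, _ in ranked[:k]]


store = [
    ("chain of thought", [0.9, 0.1, 0.0]),
    ("tree of thoughts", [0.8, 0.3, 0.1]),
    ("vector database", [0.0, 0.2, 0.9]),
    ("tool use", [0.1, 0.9, 0.2]),
]
query = [1.0, 0.2, 0.0]  # pretend embedding of "task decomposition"
print(knn_search(query, store, k=2))  # ['chain of thought', 'tree of thoughts']
```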

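Instantiate a local LLM served by Ollama (for example qwen3_agent:latest; the code below uses a local model tagged `psychology:latest`) and test that inference works: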

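```python
from langchain_ollama import ChatOllama

# Local chat model; reasoning=True asks a thinking-capable model
# to return its reasoning separately from the final answer
model = ChatOllama(
    reasoning=True,
    model="psychology:latest",
)

response_message = model.invoke("How can the accuracy of RAG be improved?")
print(response_message.content)
```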

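Building a Chain Expression (LCEL)

We can build a summarization chain by passing in the retrieved documents and a simple prompt.

The chain formats the prompt template with the supplied input keys and passes the formatted prompt to the specified model: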

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_chroma import Chroma
from langchain_ollama import OllamaEmbeddings, ChatOllama
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

local_embeddings = OllamaEmbeddings(model="nomic-embed-text")
vectorstore = Chroma.from_documents(documents=all_splits, embedding=local_embeddings)

model = ChatOllama(
    model="psychology:latest",
)

prompt = ChatPromptTemplate.from_template(
    "Summarize the main themes in these retrieved docs: {docs}"
)

# Convert the incoming documents into a single string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# The dict maps the chain input (a list of documents) to the prompt's {docs} variable
chain = {"docs": format_docs} | prompt | model | StrOutputParser()
```

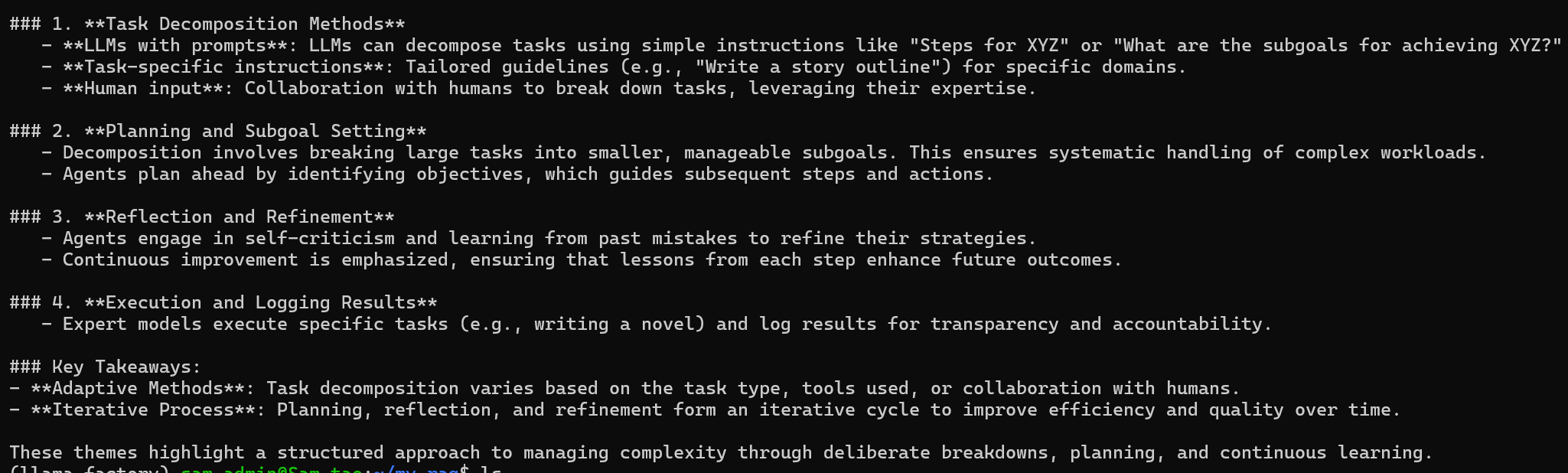

```python
question = "What are the approaches to Task Decomposition?"

docs = vectorstore.similarity_search(question)

print(chain.invoke(docs))
```
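The `|` operator composes runnables left to right, and a plain dict at the head of the chain becomes a step that computes each of its values from the same input. The toy sketch below mimics that data flow without LangChain; `Step`, `fake_model`, and the string template are hypothetical stand-ins for `ChatPromptTemplate`, `ChatOllama`, and `StrOutputParser`, not LangChain's actual implementation, and documents are plain strings instead of `Document` objects.

```python
from typing import Any, Callable


class Step:
    """Hypothetical minimal runnable: wraps one function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # step | other: the output of this step becomes the input of the next
        return Step(lambda value: other.invoke(self.invoke(value)))

    def __ror__(self, mapping: dict) -> "Step":
        # dict | step: compute every dict value from the same input, then pass the dict on
        head = Step(lambda value: {key: fn(value) for key, fn in mapping.items()})
        return head | self


def format_docs(docs: list[str]) -> str:
    return "\n\n".join(docs)


prompt = Step(lambda d: f"Summarize the main themes in these retrieved docs: {d['docs']}")
# LLM stand-in: returns a message-like dict instead of calling a model
fake_model = Step(lambda text: {"content": f"[model received {len(text)} characters]"})
# Output-parser stand-in: extracts the text content from the message
parser = Step(lambda message: message["content"])

chain = {"docs": format_docs} | prompt | fake_model | parser
print(chain.invoke(["doc one", "doc two"]))
```

Python evaluates `{...} | prompt` by falling back to `Step.__ror__`, because `dict.__or__` only accepts another dict; LangChain relies on the same fallback to coerce a leading dict into a runnable.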

Simple QA

Here the retrieved documents and the question are passed to the chain explicitly:

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_chroma import Chroma
from langchain_ollama import OllamaEmbeddings, ChatOllama
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

local_embeddings = OllamaEmbeddings(model="nomic-embed-text")
vectorstore = Chroma.from_documents(documents=all_splits, embedding=local_embeddings)

RAG_TEMPLATE = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

<context>
{context}
</context>

Answer the following question:

{question}"""

rag_prompt = ChatPromptTemplate.from_template(RAG_TEMPLATE)

model = ChatOllama(
    model="psychology:latest",
)

# Convert the incoming documents into a single string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# Pass the input dict through, replacing the "context" documents with formatted text
chain = (
    RunnablePassthrough.assign(context=lambda inputs: format_docs(inputs["context"]))
    | rag_prompt
    | model
    | StrOutputParser()
)

question = "What are the approaches to Task Decomposition?"

docs = vectorstore.similarity_search(question)

# Run
print(chain.invoke({"context": docs, "question": question}))
```

Result:

Task decomposition can be achieved through (1) simple prompts to LLMs like "Steps for XYZ" or "What are the subgoals for achieving XYZ?", (2) task-specific instruction guidelines, such as writing a story outline, and (3) human input to define and refine subtasks.
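In the chain above, `RunnablePassthrough.assign(context=...)` passes the input dict through and adds (or overwrites) the `context` key with the function's result, so `question` reaches the prompt untouched while the list of documents becomes one string. The sketch below reproduces that behaviour and the prompt rendering in plain Python; `assign` and the `Doc` class are hypothetical stand-ins for LangChain's `RunnablePassthrough.assign` and `Document`, and `str.format` mirrors the default `{variable}` placeholder syntax of `ChatPromptTemplate.from_template`.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    # Hypothetical stand-in for langchain_core.documents.Document
    page_content: str


RAG_TEMPLATE = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

<context>
{context}
</context>

Answer the following question:

{question}"""


def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)


def assign(inputs: dict, **fns) -> dict:
    # Keep every input key; add or overwrite keys computed from the whole input dict
    return {**inputs, **{key: fn(inputs) for key, fn in fns.items()}}


inputs = {"context": [Doc("Chunk A."), Doc("Chunk B.")], "question": "What is task decomposition?"}
step1 = assign(inputs, context=lambda x: format_docs(x["context"]))
print(RAG_TEMPLATE.format(**step1))
```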

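QA with Retrieval

A QA application with semantic retrieval (a local RAG application) automatically retrieves the document chunks that are semantically closest to the user's question from the vector database: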

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_chroma import Chroma
from langchain_ollama import OllamaEmbeddings, ChatOllama
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

local_embeddings = OllamaEmbeddings(model="nomic-embed-text")
vectorstore = Chroma.from_documents(documents=all_splits, embedding=local_embeddings)

RAG_TEMPLATE = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

<context>
{context}
</context>

Answer the following question:

{question}"""

rag_prompt = ChatPromptTemplate.from_template(RAG_TEMPLATE)

model = ChatOllama(
    model="psychology:latest",
)

# Convert the incoming documents into a single string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# Wrap the vector store as a retriever (similarity search, 4 documents by default)
retriever = vectorstore.as_retriever()

# The question string fans out: the retriever fetches and formats the context,
# while RunnablePassthrough forwards the question unchanged
qa_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | rag_prompt
    | model
    | StrOutputParser()
)

question = "What are the approaches to Task Decomposition?"

print(qa_chain.invoke(question))
```

Result:

The approaches to Task Decomposition include (1) simple prompting by LLMs, such as "Steps for XYZ" or "What are the subgoals for achieving XYZ?" (2) task-specific instructions tailored to particular goals, like writing a story outline, and (3) incorporating human inputs for collaborative refinement.
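Unlike the previous chain, `qa_chain` takes the bare question string. The dict at its head sends that same string two ways: through `retriever | format_docs` to build `context`, and unchanged through `RunnablePassthrough()` to `question`. The sketch below imitates that fan-out in plain Python; `toy_retriever` (word-overlap ranking over a hard-coded list) and `fan_out` are hypothetical stand-ins for the Chroma retriever and LangChain's parallel dict step. The real retriever returned by `as_retriever()` runs a similarity search on the vector store instead.

```python
import re

CHUNKS = [
    "Task decomposition can be done with simple prompts like 'Steps for XYZ'.",
    "Task-specific instructions such as writing a story outline also help.",
    "Chroma stores embeddings for similarity search.",
]


def words(text: str) -> set[str]:
    # Lowercase word set used for the toy overlap score
    return set(re.findall(r"\w+", text.lower()))


def toy_retriever(question: str, k: int = 2) -> list[str]:
    # Hypothetical retriever: rank chunks by the number of words shared with the question
    q_words = words(question)
    return sorted(CHUNKS, key=lambda chunk: len(q_words & words(chunk)), reverse=True)[:k]


def format_docs(docs: list[str]) -> str:
    return "\n\n".join(docs)


def fan_out(question: str) -> dict:
    # The same input feeds both branches: retrieval builds context, passthrough keeps question
    return {"context": format_docs(toy_retriever(question)), "question": question}


result = fan_out("What are the approaches to Task Decomposition?")
print(result["context"])
```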
